The 'weird events' that make machines hallucinate

Computers can be made to see a sea turtle as a gun or hear a concerto as someone’s voice, which is raising concerns about using artificial intelligence in the real world.

The passenger sees the stop sign and feels a sudden surge of panic as the car he’s sitting in speeds up. He opens his mouth to shout to the driver in the front, remembering, as he spots the train tearing towards them on the tracks ahead, that there is none. The train hits at 125mph, crushing the autonomous vehicle and instantly killing its occupant.

This scenario is fictitious, but it highlights a very real flaw in current artificial intelligence frameworks. Over the past few years, there have been mounting examples of machines that can be made to see or hear things that aren’t there. By introducing ‘noise’ that scrambles their recognition systems, these machines can be made to hallucinate. In a worst-case scenario, they could ‘hallucinate’ a situation as dangerous as the one above: despite the stop sign being clearly visible to human eyes, the machine fails to recognise it.

Those working in AI describe such glitches as ‘adversarial examples’ or sometimes, more simply, as ‘weird events’.
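To make the idea of ‘noise’ concrete, here is a toy sketch in Python. It is not how Athalye’s, Carlini’s or Eykholt’s teams built their attacks: it swaps a deep neural network for a made-up linear ‘stop sign’ detector and applies the fast gradient sign method, one well-known way of crafting adversarial examples. The weights, the 1,000-pixel image and the limit of 0.02 per pixel are all hypothetical.

```python
# Toy adversarial-example sketch. The detector weights, bias and image are made up
# for illustration; real attacks target deep networks, not a linear model.

def score(weights, bias, x):
    """Linear score: above zero means 'stop sign', below zero means 'not a stop sign'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    """Return +1, -1 or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_perturb(weights, x, epsilon):
    """Fast-gradient-sign-style attack on a linear model.

    The gradient of a linear score with respect to the input is just the weight
    vector, so moving every feature by epsilon against the sign of its weight
    lowers the score by epsilon * sum(|w|), the most any change of that size can.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

n = 1000                                   # number of hypothetical "pixels"
weights = [0.01 if i % 2 == 0 else -0.01 for i in range(n)]
bias = 0.1
image = [0.5] * n                          # every pixel at mid-grey

adversarial = fgsm_perturb(weights, image, epsilon=0.02)

print(score(weights, bias, image))         # about 0.1: detected as a stop sign
print(score(weights, bias, adversarial))   # about -0.1: the stop sign is missed
print(max(abs(a - b) for a, b in zip(image, adversarial)))  # each pixel moved by about 0.02
```

Each pixel shifts by only 2% of its range, but all thousand nudges push the score the same way. That accumulation across many inputs is one proposed explanation for why high-dimensional data such as images is so easy to perturb.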

“We can think of them as inputs that we expect the network to process in one way, but the machine does something unexpected upon seeing that input,” says Anish Athalye, a computer scientist at the Massachusetts Institute of Technology in Cambridge.

Seeing things

So far, most of the attention has been on visual recognition systems. Athalye himself has shown it is possible to tamper with an image of a cat so that it looks normal to our eyes but is misinterpreted as guacamole by so-called neural networks, the machine-learning algorithms that are driving much of modern AI technology. These sorts of visual recognition systems are already being used to underpin your smartphone’s ability to tag photos of your friends without being told who they are or to identify other objects in the images on your phone.

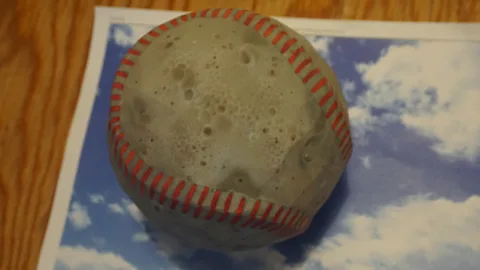

More recently, Athalye and his colleagues turned their attention to physical objects. By slightly tweaking the texture and colouring of these, the team could fool the AI into thinking they were something else. In one case a baseball was misclassified as an espresso, and in another a 3D-printed turtle was mistaken for a rifle. They were able to produce some 200 other examples of 3D-printed objects that tricked the computer in similar ways. As we begin to put robots in our homes, autonomous drones in our skies and self-driving vehicles on our streets, it starts to throw up some worrying possibilities.

“At first this started off as a curiosity,” says Athalye. “Now, however, people are looking at it as a potential security issue as these systems are increasingly being deployed in the real world.”

Take driverless cars, which are currently undergoing field trials: these often rely on sophisticated deep-learning neural networks to navigate and tell them what to do.

But last year, researchers demonstrated that neural networks could be tricked into misreading road ‘Stop’ signs as speed limit signs, simply through the placement of small stickers on the sign.

Hearing voices

Neural networks aren’t the only machine learning frameworks in use, but the others also appear vulnerable to these weird events. And they aren’t limited to visual recognition systems.

“On every domain I've seen, from image classification to automatic speech recognition to translation, neural networks can be attacked to mis-classify inputs,” says Nicholas Carlini, a research scientist at Google Brain, which is developing intelligent machines. Carlini has shown how, with the addition of what sounds like a bit of scratchy background noise, a voice reading “without the dataset the article is useless” can be mistranslated as “Ok Google browse to evil dot com”. And it is not just limited to speech. In another example, an excerpt from Bach’s Cello Suite No 1 was transcribed as “speech can be embedded in music”.

To Carlini, such adversarial examples “conclusively prove that machine learning has not yet reached human ability even on very simple tasks”.

Under the skin

Neural networks are loosely based on how the brain processes visual information and learns from it. Imagine a young child learning what a cat is: as they encounter more and more of these creatures, they will start noticing patterns – that this blob called a cat has four legs, soft fur, two pointy ears, almond-shaped eyes and a long fluffy tail. Inside the child’s visual cortex (the section of the brain that processes visual information), there are successive layers of neurons that fire in response to visual details, such as horizontal and vertical lines, enabling the child to construct a neural ‘picture’ of the world and learn from it.

Neural networks work in a similar way. Data flows through successive layers of artificial neurons, and after being trained on hundreds or thousands of examples of the same thing (usually labelled by a human), the network starts to spot patterns that enable it to predict what it is viewing. The most sophisticated of these systems employ ‘deep learning’, which means they possess more of these layers.
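That layered flow can be sketched in a few lines of Python, assuming a tiny two-layer network whose weights are invented for illustration rather than learned from labelled examples (training would normally set them). Each layer computes weighted sums of its inputs; the hidden layer then applies a simple ReLU threshold, and the final scores become probabilities for the hypothetical labels ‘cat’ and ‘dog’.

```python
import math

def layer(inputs, weights, biases):
    """One layer of artificial neurons: each output is a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Simple threshold: a neuron passes its signal on only if it is positive."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, layers):
    """Pass the input through successive layers; hidden layers use ReLU."""
    for i, (weights, biases) in enumerate(layers):
        x = layer(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return softmax(x)

# Hypothetical weights: 3 input features -> 2 hidden neurons -> 2 labels.
network = [
    ([[1.0, -1.0, 0.5], [-0.5, 1.0, 1.0]], [0.0, 0.0]),
    ([[2.0, -1.0], [-1.0, 2.0]], [0.0, 0.0]),
]
labels = ["cat", "dog"]

probs = forward([1.0, 0.0, 1.0], network)
prediction = labels[probs.index(max(probs))]
print(prediction, [round(p, 3) for p in probs])  # cat [0.953, 0.047]
```

A ‘deep’ network follows the same pattern but stacks many more layers, with weights adjusted during training until its predictions match the human-provided labels.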

However, although computer scientists understand the nuts and bolts of how neural networks work, they don’t necessarily know the fine details of what’s happening when they crunch data. “We don't currently understand them well enough to, for example, explain exactly why the phenomenon of adversarial examples exists and know how to fix it,” says Athalye.

Part of the problem may relate to the nature of the tasks that existing technologies have been engineered to solve: distinguishing between images of cats and dogs, say. To do this, the technology will process numerous examples of cats and dogs, until it has enough data points to distinguish between them.

“The dominant goal of our machine learning frameworks was to achieve a good performance ‘on average’,” says Aleksander Madry, another computer scientist at MIT, who studies the reliability and security of machine learning frameworks. “When you just optimise for being good on most dog images, there will always be some dog images that will confuse you.”

One solution might be to train neural networks with more challenging examples of the thing you’re trying to teach them. This can immunise them against outliers.

“Definitely it is a step in the right direction,” says Madry. While this approach does seem to make frameworks more robust, it probably has limits as there are numerous ways you could tweak the appearance of an image or object to generate confusion.
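Training on ‘more challenging examples’ is often called adversarial training. The Python sketch below is a hypothetical illustration, not the specific method used by Madry’s group, which works with deep networks: a two-feature logistic-regression classifier, a made-up four-point dataset, and an attacker assumed to shift each feature by at most 0.3. At every step the model is trained on the worst-case version of each example for its current weights.

```python
import math

# Sketch of adversarial training on a toy logistic-regression classifier.
# Dataset, learning rate, epsilon and epoch count are all hypothetical.

def predict(w, b, x):
    """Probability that x belongs to class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def worst_case(w, x, y, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss.

    For logistic regression the loss gradient with respect to x is (p - y) * w,
    and p - y is negative for class 1 and positive for class 0.
    """
    direction = -1 if y == 1 else 1
    return [xi + direction * epsilon * sign(wi) for wi, xi in zip(w, x)]

def train(data, epsilon, epochs=200, lr=0.1):
    """Stochastic gradient descent on the log loss; epsilon > 0 trains on attacked inputs."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            if epsilon > 0:
                x = worst_case(w, x, y, epsilon)   # attack the current model
            p = predict(w, b, x)
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
            b -= lr * (p - y)
    return w, b

# Feature 0 separates the classes by a wide margin; feature 1 only by +/-0.2,
# which an attacker allowed to move each feature by 0.3 can overturn.
data = [([1.0, 0.2], 1), ([-1.0, -0.2], 0), ([0.8, 0.2], 1), ([-0.8, -0.2], 0)]

w_standard, _ = train(data, epsilon=0.0)
w_robust, _ = train(data, epsilon=0.3)
print(w_standard)  # relies on both features
print(w_robust)    # weight on the fragile feature 1 stays close to zero
```

On this data the standard model leans on both features, while the adversarially trained one keeps the weight on the fragile second feature near zero and relies on the wide-margin first feature. Its robustness only covers shifts of up to 0.3 per feature, which echoes the limit described above: a model hardened against one kind of tweak can still be confused by another.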

A truly robust image classifier would replicate what ‘similarity’ means to a human: it would understand that a child’s doodle of a cat represents the same thing as a photo of a cat and a real-life moving cat. Impressive as deep learning neural networks are, they are still no match for the human brain when it comes to classifying objects, making sense of their environment or dealing with the unexpected.

If we want to develop truly intelligent machines that can function in real world scenarios, perhaps we should go back to the human brain to better understand how it solves these issues.

Binding problem

Although neural networks were inspired by the human visual cortex, there’s a growing acknowledgement that the resemblance is merely superficial. A key difference is that as well as recognising visual features such as edges or objects, our brains also encode the relationships between those features – so, this edge forms part of this object. This enables us to assign meaning to the patterns we see.

“When you or I look at a cat, we see all the features that make up cats and how they all relate to one another,” says Simon Stringer of the Oxford Foundation for Theoretical Neuroscience and Artificial Intelligence. “This ‘binding’ information is what underpins our ability to make sense of the world, and our general intelligence.”

This critical information is lost in the current generation of artificial neural networks.

“If you haven’t solved binding, you might be aware that somewhere in the scene there is a cat, but you don’t know where it is, and you don’t know what features in a scene are part of that cat,” Stringer explains.

In their desire to keep things simple, engineers building artificial neural frameworks have ignored several properties of real neurons – the importance of which is only beginning to become clear. Neurons communicate by sending action potentials or ‘spikes’ down the length of their bodies, which creates a time delay in their transmission. There’s also variability between individual neurons in the rate at which they transmit information – some are quick, some slow. Many neurons seem to pay close attention to the timing of the impulses they receive when deciding whether to fire themselves.

“Artificial neural networks have this property that all neurons are exactly the same, but the variety of morphologically different neurons in the brain suggests to me that this is not irrelevant,” says Jeffrey Bowers, a neuroscientist at the University of Bristol who is investigating which aspects of brain function aren’t being captured by current neural networks.

Another difference is that, whereas synthetic neural networks are based on signals moving forward through a series of layers, “in the human cortex there are as many top-down connections as there are bottom-up connections”, says Stringer.

His lab develops computer simulations of the human brain to better understand how it works. When they recently tweaked their simulations to incorporate this information about the timing and organisation of real neurons, and then trained them on a series of visual images, they spotted a fundamental shift in the way their simulations processed information.

Rather than all of the neurons firing at the same time, they began to see the emergence of more complex patterns of activity, including the existence of a subgroup of artificial neurons that appeared to act like gatekeepers: they would only fire if the signals they received from related lower- and higher-level features in a visual scene arrived at the same time.

Stringer thinks that these “binding neurons” may act like the brain’s equivalent of a marriage certificate: they formalise the relationships between neurons and provide a means of fact-checking whether two signals that appear related really are related. In this way, the brain can detect whether two diagonal lines and a curved line appearing in a visual scene, for example, really represent a feature like a cat’s ear, or something entirely unrelated.
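Stringer’s spiking networks are far richer than this, but the basic idea of a neuron that fires only when two signals coincide can be sketched in Python. Everything here is an assumption for illustration: spike times in milliseconds, a fixed transmission delay per pathway, and a hypothetical 2 ms coincidence window.

```python
def binding_neuron(lower_spikes, higher_spikes, window_ms=2.0,
                   lower_delay_ms=0.0, higher_delay_ms=0.0):
    """Toy coincidence detector: fire only when a lower-level and a higher-level
    spike arrive within window_ms of each other (all parameters hypothetical)."""
    # Each pathway delays its spikes by a fixed transmission time.
    lower_arrivals = [t + lower_delay_ms for t in lower_spikes]
    higher_arrivals = [t + higher_delay_ms for t in higher_spikes]

    firing_times = []
    for a in lower_arrivals:
        matches = [h for h in higher_arrivals if abs(a - h) <= window_ms]
        if matches:
            partner = min(matches, key=lambda h: abs(a - h))  # closest higher-level spike
            # The neuron can only fire once both signals have arrived.
            firing_times.append(max(a, partner))
    return firing_times

# Edge spikes (lower level) and 'ear' spikes (higher level), in ms.
print(binding_neuron([10.0, 30.0, 50.0], [11.0, 40.0, 50.5]))  # [11.0, 50.5]

# The same pair of spikes under different transmission delays.
print(binding_neuron([10.0], [5.0]))                       # []
print(binding_neuron([10.0], [5.0], higher_delay_ms=4.0))  # [10.0]
```

The last two calls show why timing matters in this sketch: the same two spikes are either bound together or ignored depending on how long each signal takes to arrive.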

“Our hypothesis is that the feature binding representations present in the visual brain, and replicated in our biological spiking neural networks, may play an important role in contributing to the robustness of biological vision, including the recognition of objects, faces and human behaviours,” says Stringer.

Stringer’s team is now seeking evidence for the existence of such neurons in real human brains. They are also developing ‘hybrid’ neural networks that incorporate this new information to see if they produce a more robust form of machine learning.

“Whether this is what happens in the real brain is unclear at this point, but it is certainly intriguing, and highlights some interesting possibilities,” says Bowers.

One thing Stringer’s team will be testing is whether their biologically inspired neural networks can reliably discriminate between an elderly person falling over in their home and simply sitting down or putting the shopping down.

“This is still a very difficult problem for today’s machine-vision algorithms, and yet the human brain can solve this effortlessly,” says Stringer. He is also collaborating with the Defence Science and Technology Laboratory at Porton Down, in Wiltshire, England, to develop a next-generation, scaled-up version of his neural framework that could be applied to military problems, such as spotting enemy tanks from smart cameras mounted on autonomous drones.

Stringer’s goal is to have bestowed rat-like intelligence on a machine within 20 years. Still, he acknowledges that creating human-level intelligence may take a lifetime – maybe even longer.

Madry agrees that this neuroscience-inspired approach is an interesting way of tackling the problems with current machine learning algorithms.

“It is becoming ever clearer that the way the brain works is quite different to how our existing deep learning models work,” he says. “So, this indeed might end up being a completely different path to achieving success. It is hard to say how viable it is and what the timeframe needed to achieve success here is.”

In the meantime, we may need to avoid placing too much trust in the AI-powered robots, cars and programs that we will be increasingly exposed to. You just never know if it might be hallucinating.

If you liked this story, sign up for the weekly bbc.com features newsletter, called “If You Only Read 6 Things This Week”. A handpicked selection of stories from BBC Future, Culture, Capital, and Travel, delivered to your inbox every Friday.